Does CGC not have the exact same problem now?

Similar yes

I’ll play the devil’s advocate:

Sure, they will most likely update (and therefore hopefully improve) their grading accuracy over time. But it depends on what percentage of all graded cards it affects. Is this some detection “bug” that affects 1 in 1 million cards? Then it doesn’t matter that the grade would change, in my opinion. Human graders miss stuff all the time AND they are inconsistent. At least the detection logic gives the same result every time and can be improved - you can’t patch a human who made a mistake after 3 hrs of sleep.

What worries me more is the chance of bricking the results every time they touch the logic or adjust the imaging setup - lighting, background, cameras/lenses. I wonder how they do the testing, given that you could say every card is unique. Ideally you would store every frame you take of every card being graded, along with the results, then re-run the grading with the new version of the software on all previously graded cards and compare the results. Where would you draw the line and say it is acceptable for x % of cards to change their grade in the new version? Would anyone inspect these grade shifts to check whether they are valid or a new bug? What if they’re not able to tell?
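To make the "re-run and compare" idea concrete, here is a minimal sketch in Python. It is not how TAG tests anything (nobody in this thread knows that); the names `compare_versions`, `backtest`, and `max_shift_rate` are made up for illustration, and it assumes both software versions produced a label grade for the same set of stored cards.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GradeShift:
    card_id: str
    old_grade: float
    new_grade: float


def compare_versions(old_grades, new_grades):
    """List every card whose label grade differs between two software versions.

    Both arguments map card_id -> label grade (hypothetical data layout).
    """
    shifts = []
    for card_id, old in old_grades.items():
        new = new_grades[card_id]  # assumes the new run covers every stored card
        if new != old:
            shifts.append(GradeShift(card_id, old, new))
    return shifts


def backtest(old_grades, new_grades, max_shift_rate):
    """Summarise a re-run: share of shifted cards and whether it stays under the limit.

    max_shift_rate is the "x %" from the post, expressed as a fraction.
    """
    shifts = compare_versions(old_grades, new_grades)
    rate = len(shifts) / len(old_grades)
    return {
        "shift_rate": rate,
        "acceptable": rate <= max_shift_rate,
        "to_review": shifts,  # these still need a manual look: valid change or new bug?
    }
```

The code only answers "how many cards moved". Deciding whether each entry in `to_review` is a legitimate correction or a regression is still a human judgment, which is exactly the open question above.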

Computer assisted grading - great idea in my opinion - depending on how it is utilized. I can imagine processes where it causes more trouble than it helps.

Fully automated grading - house of cards.

Simple counterpoint: we have no idea how many cards are affected, this one was only caught because it was advertised online. This exact ‘bug’ could’ve happened dozens of times before with TAG being none the wiser.

Who knows if and how many other bugs there are they don’t know about because they aren’t thrown into the spotlight?

I think eventually software could easily develop to a point where humans are no longer required as graders, but the path to get there is computer assisted grading (i.e. when the false positives and false negatives no longer occur). Grading is a lot less complex and has fewer variables than many a job that is currently being automated (it’s just a lot more niche).

TAG is already machine-first, when I think the machine should come second for another 10 million or so cards to work out the ‘bugs’. That may not be realistic for a company that may not have the capacity and market share.

I like the look of the slab. And the UV resistant protection is a big plus for me.

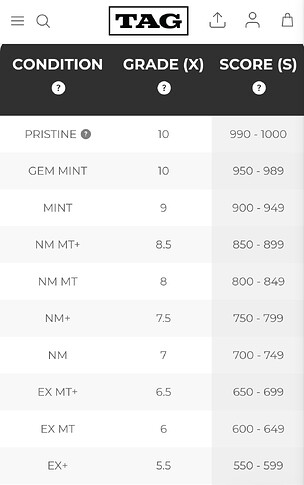

The issue I can foresee is rooted in their 1000-point precision. It gives the illusion of objectivity and machine precision, but ultimately grading is a subjective task.

Their grade scale has 100x more resolution than PSA’s. Imagine BGS with 2 decimal places in each subgrade. I don’t think that level of resolution is stable. Meaning if you do a technical replicate by simply rescanning the card from scratch, I would expect a different result. If you change the machine settings a bit, I would expect the results to really change. To clarify, I would consider something like 10 points out of 1000 a moderate change.
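A quick simulation of the replicate argument, as a sketch only. Everything here is an assumption for illustration: the scan noise is modelled as Gaussian with a made-up standard deviation, and the label is assumed to be the 1000-point score floored to half-grade steps of 50 points. Neither is confirmed anywhere in this thread.

```python
import random


def coarse_label(score):
    """Assumed mapping: floor the 1000-point score to a half-grade label (e.g. 890 -> 8.5)."""
    return (score // 50) * 50 / 100


def rescan(true_score, noise_sd, rng):
    """One simulated scan: the true score plus Gaussian noise, clamped to 0..1000."""
    noisy = round(rng.gauss(true_score, noise_sd))
    return max(0, min(1000, noisy))


def agreement_rates(true_score, noise_sd, trials=10_000, seed=0):
    """Fraction of rescan pairs that agree on the exact score and on the coarse label."""
    rng = random.Random(seed)
    same_score = 0
    same_label = 0
    for _ in range(trials):
        a = rescan(true_score, noise_sd, rng)
        b = rescan(true_score, noise_sd, rng)
        same_score += a == b
        same_label += coarse_label(a) == coarse_label(b)
    return same_score / trials, same_label / trials
```

Calling `agreement_rates(875, 5.0)` compares the two resolutions for a card sitting in the middle of a grade band. With any non-zero noise the exact score repeats far less often than the half-grade label, which is the stability concern in a nutshell.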

The second layer is the computational model that determines the grade. If it’s machine learning-based, it’s extremely hard to keep it consistent because how it actually works in detail is not known. So any update to the model will have unknown consequences for previously graded cards.

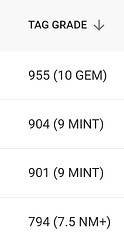

The primary issue is cards that sit on the boundary between grades. Funnily enough, all 6 illustrators are 6 points away from a different grade (0.06 of a grade). Even a very tiny change to any parameter could shift the grade on cards like these. The more precision your grading has, the more consistent everything has to be.
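A small sketch of how you could flag boundary cards. It assumes, hypothetically, that label grades change every 50 points and that the label is the score rounded down to the nearest step. The score 894 below is a made-up example of a card 6 points under a boundary, not a real card from this thread.

```python
def boundary_margin(score, step=50):
    """Return (points above the lower boundary, points needed to reach the next one)."""
    below = score % step
    above = step - below
    return below, above


def at_risk(score, tolerance, step=50):
    """True if a drift of `tolerance` points or less could change the label.

    With floor-style labels, dropping a grade takes `below + 1` points
    and gaining one takes `above` points.
    """
    below, above = boundary_margin(score, step)
    return min(below + 1, above) <= tolerance


# Hypothetical scores: one just under a boundary, one mid-band.
print(boundary_margin(894))   # (44, 6)
print(at_risk(894, 10))       # True
print(at_risk(875, 10))       # False
```

If a 10-point change counts as "moderate", any card within 10 points of a boundary is one software or hardware tweak away from a different label.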

I agree 100%. By moving a grade, I mean the thing on the label: 9.5 → 9.0, 8.5 → 9.0 etc. I agree that letting the users know that it’s not 8.5, but actually 890 is a stupid move, because that is so granular that they’ll definitely run into problems with this.

I think the biggest issue is any change to the equipment - cameras/light setup/background/position of the lens etc., because once they change it, it affects:

a) the detection logic (model, any pre/post processing etc.).

But more importantly b):

if you make some bigger change to the setup, you can’t reliably do any “backtesting” to validate the new detection logic, since the images you have were taken under different conditions.

If they kept the setup the same (which is impossible), then they could always roll out a new version of the software, test it against all of the already graded cards, see that, say, 500 out of 200,000 cards have moved their grades, manually check these changes to verify they are valid (and that they’re not introducing some new bug), and then move on from there.

If they find out that they should take photos from a slightly different angle to discover some “new” defect, they’re screwed, because you can’t magically take new photos of the already graded cards from this new angle. And then you run into the thing you mentioned: this card is X in this version of TAG, while it is Y under the latest version of TAG.

Yep I agree with all of this! But if they did a good job training their machine learning model (assuming they use AI) it should generalize pretty well across set-up conditions. The problem being it’s hard to impossible to know how the set-up conditions will affect the grading (because the models are a black box) ESPECIALLY at the resolution of 0.01 grade points of a completely subjective scale.

My thoughts are…collectors don’t care that much about the minutiae of their card grade (it’s why subgrades at CGC were like 10% of all graded cards or something).

Plus if they set a standard via their “system” (whatever that actually is) then likely vintage will be very harshly graded with them.

99% of WotC 10s have issues, so if their system holds vintage to the same standard as modern, I can imagine vintage collectors will likely just stick with PSA, as they may be more understanding that vintage is never perfect.

The way they are being pumped right now by people claiming to have no financial interest is also extremely suspicious.

As others have said, most likely they are looking for a buy out.

There has been a lot of noise lately and not really because a bunch of people are organically using them instead of other companies. I don’t know what to make of it but it doesn’t persuade me to use their product.

I’m all for good competition but it does feel very “pumpy-dumpy”

it makes total sense they would be aiming for an acquisition. the tech is really cool, and if it’s as consistent as they say, then companies like PSA or CGC would want to cut costs + improve TAT with tech like it.

personally i wouldn’t even think about using any grading company that doesn’t qualify for ebay auth… that’s just me tho

I’m curious if that was their actual approach rather than something more hand-tunable. I wouldn’t be that surprised if the grading function was more like a bunch of objective measurements (should be highly repeatable) thrown into some multidimensional curve fit equation that matches grades from a competitor (I’d pick PSA if I were them).

I think overall I like the idea of machine grading but I personally would wait like 5 years to see if they can survive the industry / stabilise grading scale / iron out important issues a new grading company will face.

Maybe by then they’ll be the biggest of all the grading companies haha, though I do think a buy out is the most likely option for them in the next couple of years. Who knows!

Yep, it’s possible but I have no idea. A set of basic functions might be repeatable if given the exact same input twice but is going to be way more sensitive to small changes in input or if the conditions deviate too far from what the programmer expects.

If they have a laser or some device they think can measure surface height to 0.001", and again for centering to 0.001", corners, edges, etc, it would be fairly easy I believe to repeat a value 1-1000. I’m saying their device is spec’d to measure 0.001", so any noise in it has to be less than that. If placing the same card in the measurement area yields different results, then obviously something about the setup is not repeatable enough.
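The repeatability check described here is easy to sketch: measure the same card several times and see whether the spread stays under the device's stated resolution. The function name, the readings, and the use of the full range (max minus min) as the spread measure are my own choices for illustration, not anything known about TAG's equipment.

```python
import statistics


def repeatability_report(readings, resolution):
    """Summarise repeated measurements of the same feature on the same card.

    readings:   repeated values in inches (needs at least two)
    resolution: the smallest step the device claims to resolve, e.g. 0.001
    """
    spread = max(readings) - min(readings)
    return {
        "mean": statistics.fmean(readings),
        "stdev": statistics.stdev(readings),
        "range": spread,
        # Repeatable only if re-placing the card never moves the reading by a full step.
        "repeatable": spread < resolution,
    }


# Hypothetical readings from re-placing one card four times.
report = repeatability_report([0.0120, 0.0122, 0.0121, 0.0119], resolution=0.001)
print(report["repeatable"])
```

If `repeatable` comes back false for a setup like this, the noise is coming from placement or alignment rather than from the grading function.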

I am just thinking about how I would do it, and so far that is relying on some lab quality measurement devices + some repeatable way to align for measurements, and finally the grading function actually derived from tens of thousands of real measurements and desired final grades, so that I get the RMS best fit for the noise in my real system.
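Here is roughly what that "best fit" step could look like, as a sketch under heavy assumptions. It uses a plain linear least-squares fit (which minimises the RMS error) from a few measurements per card to a target grade. The linear form, the two example features, and the function names are all hypothetical; a real grading function could be any curve, and nothing here is known to be TAG's method.

```python
def solve(a, b):
    """Solve the linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_grading_function(measurements, target_grades):
    """Least-squares weights [intercept, w1, w2, ...] via the normal equations."""
    rows = [[1.0] + list(m) for m in measurements]  # prepend intercept term
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    atb = [sum(r[i] * g for r, g in zip(rows, target_grades)) for i in range(k)]
    return solve(ata, atb)


def predict(weights, measurement):
    """Apply the fitted weights to one card's measurements."""
    return weights[0] + sum(w * x for w, x in zip(weights[1:], measurement))


# Made-up data: (centering error, surface defect depth) -> grade from a reference grader.
cards = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (0.5, 0.2)]
grades = [10 - 2 * c - 3 * s for c, s in cards]  # synthetic targets, not real grades
weights = fit_grading_function(cards, grades)
```

With tens of thousands of real cards instead of five synthetic ones, the residuals of a fit like this would also tell you how much of the reference grades the measurements can explain at all.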